Containerization simplifies the process of building, testing, and deploying applications, enabling teams to work more efficiently and effectively. By reducing the risk of compatibility issues, optimizing resource utilization, and improving security, containerization has become an essential tool for modern software development and DevOps.

In this blog post, we will dive into the world of containerization with Docker. We will explore the advantages and disadvantages of Docker, its architecture and components, and the basics of working with Docker commands.

What Is Containerization of Applications?

Containerization of applications is the process of packaging an application and its dependencies into a self-contained unit called a container, which can then be run consistently and predictably across different environments, regardless of the underlying infrastructure.

Containers provide a lightweight and efficient way to isolate applications and their dependencies from the host system and other containers, improving portability, scalability, and security. Containerization is becoming increasingly popular in software development and DevOps as it enables teams to build, test, and deploy applications more quickly and effectively.

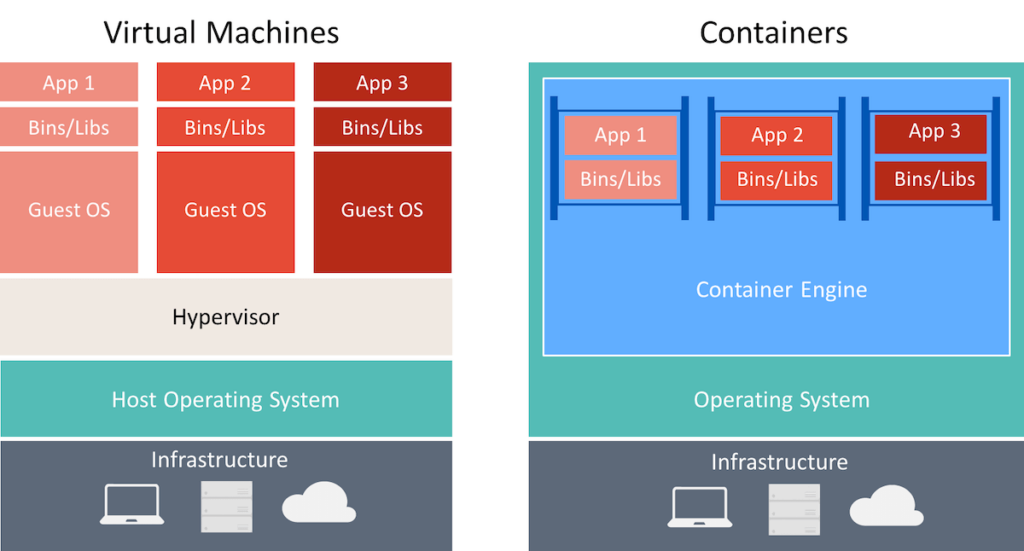

Virtual machines emulate a complete hardware environment and each run their own guest operating system, while containers share the host machine's operating system kernel and provide isolated runtime environments for individual applications.

Virtual machines are typically larger and more resource-intensive than containers, as they require their own virtual hardware and operating system. Containers are much smaller and can share resources with the host machine, making them more efficient and lightweight.

What is Docker?

Docker is a containerization platform for packaging and running applications in isolated environments called containers. It lets you create, deploy, and run applications consistently across different environments, which makes building, testing, and deploying them faster.

Docker uses container technology to isolate applications from their host environment and ensure consistency across different deployment environments.

Docker Architecture:

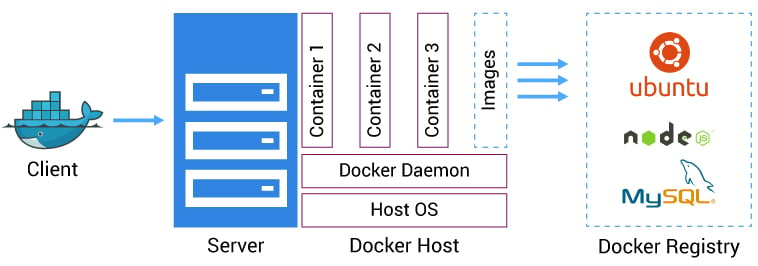

The Docker architecture consists of a client-server model with the Docker daemon, the Docker client, and the Docker registry working together to build, ship, and run containers.

Docker Engine: This is the core component of Docker that provides the runtime environment for containers. It includes a server that runs as a daemon process, a command-line interface for interacting with the daemon, and an API for integrating with other tools and systems.

Docker Registry: This is a centralized repository that stores Docker images, the pre-built templates from which new containers are created. Docker Hub is the official Docker registry, but there are also private registries that can be used for storing and sharing custom images.

Docker CLI: This is the command-line interface that developers use to interact with Docker. It provides a set of commands for building, running, and managing containers and other administrative tasks such as managing Docker networks and volumes.

Docker Compose: This tool defines and runs multi-container Docker applications. It allows developers to specify the dependencies between containers and configure the environment variables, volumes, and networks needed to run the application.

Docker Swarm: This is a container orchestration platform that allows developers to manage and scale multiple Docker containers across multiple hosts. It provides a simple way to deploy and manage containers at scale, with features such as load balancing, service discovery, and rolling updates.

Advantages of using Docker:

Portable: Docker provides a standardized way to package and run applications, making it easy to move applications across different environments.

Lightweight: Docker containers are lightweight and consume fewer resources than virtual machines, enabling developers to run more applications on the same infrastructure.

Isolation: Docker containers provide a high level of isolation between applications, which improves security and stability.

Flexible: Docker allows developers to quickly build and deploy applications in a variety of programming languages and frameworks.

Scalable: Docker makes it easy to scale applications horizontally by adding or removing containers as needed.

Limitations and disadvantages of Docker:

Complexity: Docker introduces additional complexity into the development process, and developers may need to invest additional time and resources into managing and troubleshooting Docker containers.

Performance overhead: While Docker containers are more lightweight than virtual machines, they still introduce some performance overhead compared to running applications natively on the host.

Networking challenges: Docker introduces some challenges around networking, particularly when containers need to communicate with each other or with external services.

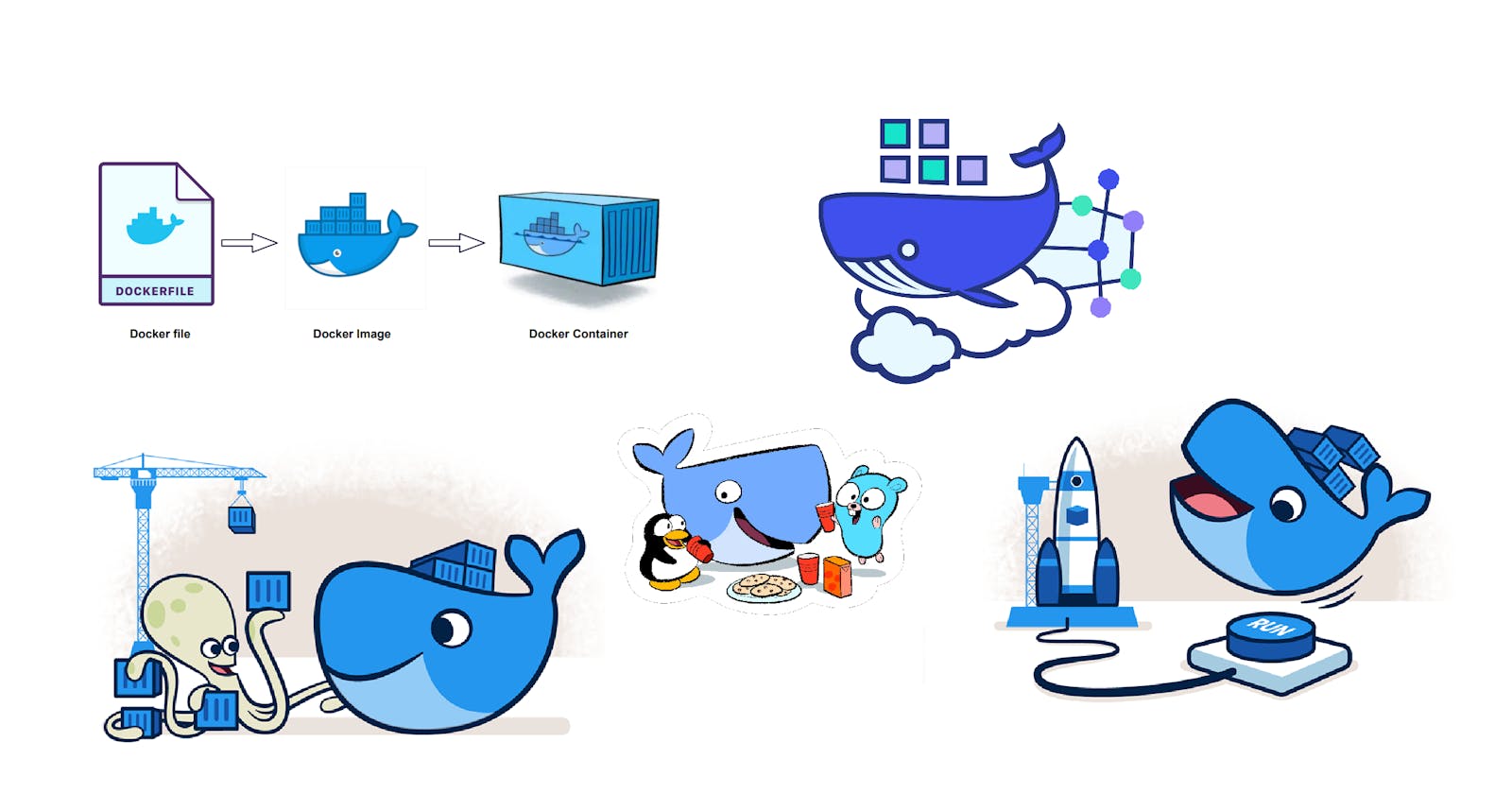

Docker Image Vs Docker Containers:

A Docker image is a lightweight, read-only, executable package that includes everything needed to run an application, including the code, runtime, libraries, and system tools. There are three ways to create a Docker image:

Building an image from a Dockerfile

Committing a container to an image

Importing an image from a Docker registry.

A Docker container is a running instance created from a Docker image and is designed to run a single process or application. Containers can be easily managed, moved, and scaled, making them an ideal solution for modern application deployment.

Docker Installation and Commands

To install Docker on your host system, follow the "Install Docker Engine" guide in the official Docker documentation.

Some important Docker commands:

docker search [image name]: This command is used to search Docker Hub for images that match the given name.

docker run [image name:tag]: This command is used to create and start a new container from a Docker image.

docker ps: This command is used to list only running Docker containers.

docker ps -a: This command is used to list all Docker containers, including stopped ones.

docker images: This command is used to list all Docker images that are currently available on the local machine.

docker pull [image name:tag]: This command is used to download a Docker image from a registry.

docker login: This command is used to authenticate with a Docker registry.

docker push [image registry name:tag]: This command is used to push a Docker image to a registry.

docker build -t [image name:tag] [path to directory containing the Dockerfile]: This command is used to build a Docker image from a Dockerfile.

docker exec -it [container ID] [command]: This command is used to execute a command inside a running Docker container.

docker start [container ID]: This command is used to start a stopped Docker container.

docker stop [container ID]: This command is used to stop a running Docker container.

docker attach [container ID]: This command is used to attach the terminal input and output of a running container to the current terminal session.

docker port [container name]: This command is used to display the port mappings of a container.

docker rm [container1 ID] [container2 ID]: This command is used to remove one or more Docker containers.

docker rmi [image1 ID] [image2 ID]: This command is used to remove one or more Docker images.
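To see how these commands fit together, here is a minimal sketch of one container's life from pull to removal. The image (nginx:alpine), the container name demo-web, and host port 8080 are illustrative choices, not anything this post depends on. The commands are wrapped in a function so that nothing runs until you call it.

```shell
# Hypothetical walkthrough: define the function, then run `demo_lifecycle`.
# Assumes Docker is installed and the daemon is running.
demo_lifecycle() {
  docker pull nginx:alpine                               # download the image from Docker Hub
  docker run -d --name demo-web -p 8080:80 nginx:alpine  # create and start a container in the background
  docker ps                                              # demo-web should be listed as running
  docker exec demo-web nginx -v                          # run a command inside the running container
  docker port demo-web                                   # show the container's port mappings
  docker stop demo-web                                   # stop the container
  docker ps -a                                           # the stopped container is still listed
  docker rm demo-web                                     # remove the container
  docker rmi nginx:alpine                                # remove the image
}
```

While the container is running, the nginx welcome page should be reachable at http://localhost:8080 on the host.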

Let's get a Docker image

There are three ways to get a Docker image:

Importing an image from a Docker registry

Building an image from a Dockerfile

The following are some instructions that you can use while working with a Dockerfile:

FROM: specifies the base image for the Dockerfile.

MAINTAINER: sets the author field of the generated images. This instruction is deprecated in favor of LABEL.

RUN: executes commands in a new layer on top of the current image and commits the results.

CMD: provides defaults for an executing container, which can be overridden by command-line arguments.

EXPOSE: informs Docker that the container listens on the specified network ports at runtime.

ADD: copies new files, directories, or remote files into the image. It can also extract local tar archives.

COPY: copies new files or directories into the image. By default, the copied files are owned by root regardless of the USER setting.

ENTRYPOINT: configures a container that will run as an executable.

VOLUME: creates a mount point for externally mounted volumes or other containers.

USER: sets the user name for subsequent RUN/CMD/ENTRYPOINT commands.

WORKDIR: sets the working directory for the instructions that follow it.

ARG: defines a build-time variable.
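As a rough illustration of how a few of these instructions combine, the sketch below writes a small Dockerfile into a scratch directory. The base image python:3.12-slim, the image name hello-web:1.0, and the file index.html are assumptions picked for the example. The build and run steps sit in a function, so only the files are created until you call it.

```shell
# Create a scratch build context; the directory is temporary and arbitrary.
ctx="$(mktemp -d)"
echo '<h1>Hello from a container</h1>' > "$ctx/index.html"

# Write a minimal Dockerfile (illustrative only).
cat > "$ctx/Dockerfile" <<'EOF'
# Base image to build on
FROM python:3.12-slim
# Working directory for the instructions below
WORKDIR /srv
# Copy a file from the build context into the image
COPY index.html .
# Document the port the application listens on
EXPOSE 8000
# Default command; can be overridden on the docker run command line
CMD ["python", "-m", "http.server", "8000"]
EOF

# Hypothetical helper: build the image, then start a container from it.
build_and_run() {
  docker build -t hello-web:1.0 "$ctx" &&
  docker run -d --name hello-web -p 8000:8000 hello-web:1.0
}
```

After calling build_and_run, the page should be served at http://localhost:8000. Clean up with docker rm -f hello-web.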

Committing a container to an image

Start the container: Use the docker run command to start the container, then execute any commands or make any changes that you want to save in the new image.

Check the container ID: Use the docker ps command to get the ID of the running container that you want to commit.

Commit the changes: Use the docker commit command to commit the changes to a new image.
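The three steps above can be sketched as follows. The container name scratchpad, the image name scratchpad-snapshot:v1, and the file /greeting.txt are made up for the example. The function only runs when you call it.

```shell
# Hypothetical walkthrough: define the function, then run `demo_commit`.
demo_commit() {
  # Step 1: start a container and make a change inside it
  docker run -d --name scratchpad ubuntu sleep infinity
  docker exec scratchpad sh -c 'echo "hello" > /greeting.txt'

  # Step 2: find the running container (its name can be used instead of the ID)
  docker ps --filter name=scratchpad

  # Step 3: commit the container's current state to a new image
  docker commit scratchpad scratchpad-snapshot:v1

  # Check: a fresh container from the new image should contain the file
  docker run --rm scratchpad-snapshot:v1 cat /greeting.txt

  # Clean up the original container
  docker rm -f scratchpad
}
```

Committing is handy for quick experiments, but a Dockerfile is usually easier to reproduce because it records every step.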

What is Docker Volume?

Docker volume is a powerful feature that allows you to decouple your containers from storage, share volumes among different containers, and attach volumes to containers.

It is a directory that is declared as a volume in Docker. The volume can be created in one container and can still be accessed after that container is stopped or deleted. You can't add a volume to an existing container, because volumes are declared when the container is created, but you can share one volume across multiple containers.

To create a volume, you can declare it in the Dockerfile by using the VOLUME instruction. For example, if you want to declare a volume mounted at "/myvolume1" in the Dockerfile, you can use the following instruction:

Dockerfile>>

FROM ubuntu

VOLUME ["/myvolume1"]

There are two ways to map a volume, either to a container or a host. When you map a volume to a container, you can specify the mount point inside the container. On the other hand, when you map a volume to a host, you can specify the directory on the host that you want to map to the container.

Create volume using commands and share with another container

docker run -it --name [container1 name] -v [volume name] [img name] /bin/bash

docker run -it --name [container2 name] --privileged=true --volumes-from [container1 name] [os image name] /bin/bash

Create volume in the host then map to container

docker run -it --name [cont-name] -v [/home/user/myvol:/volume-name] --privileged=true [img name] /bin/bash

Some Docker volume commands:

docker volume ls: This command lists all the Docker volumes that are currently available on the system.

docker volume create [volume name]: This command creates a new Docker volume with the specified name.

docker volume rm [volume name]: This command removes the specified Docker volume from the system.

docker volume prune: This command removes all the unused Docker volumes from the system.

docker volume inspect [volume name]: This command provides detailed information about the specified Docker volume, such as its mount point, labels, and driver details.

docker container inspect [container name]: This command provides detailed information about the specified Docker container, including its IP address, exposed ports, and mounted volumes.
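To make the idea of data outliving a container concrete, here is a small sketch with a named volume. The volume name demo-data, the mount path /data, and the alpine image are assumptions for the example. One short-lived container writes a file, and a second one reads it back after the first is gone.

```shell
# Hypothetical walkthrough: define the function, then run `demo_volume`.
demo_volume() {
  docker volume create demo-data

  # First container writes into the volume, then exits and is removed (--rm)
  docker run --rm -v demo-data:/data alpine \
    sh -c 'echo "written by container 1" > /data/note.txt'

  # Second container mounts the same volume and reads the file
  docker run --rm -v demo-data:/data alpine cat /data/note.txt

  # Show where Docker keeps the volume on the host, then clean up
  docker volume inspect demo-data
  docker volume rm demo-data
}
```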

Docker Networks

Docker network is a virtual network infrastructure that enables the communication between different Docker containers, as well as between containers and the host system.

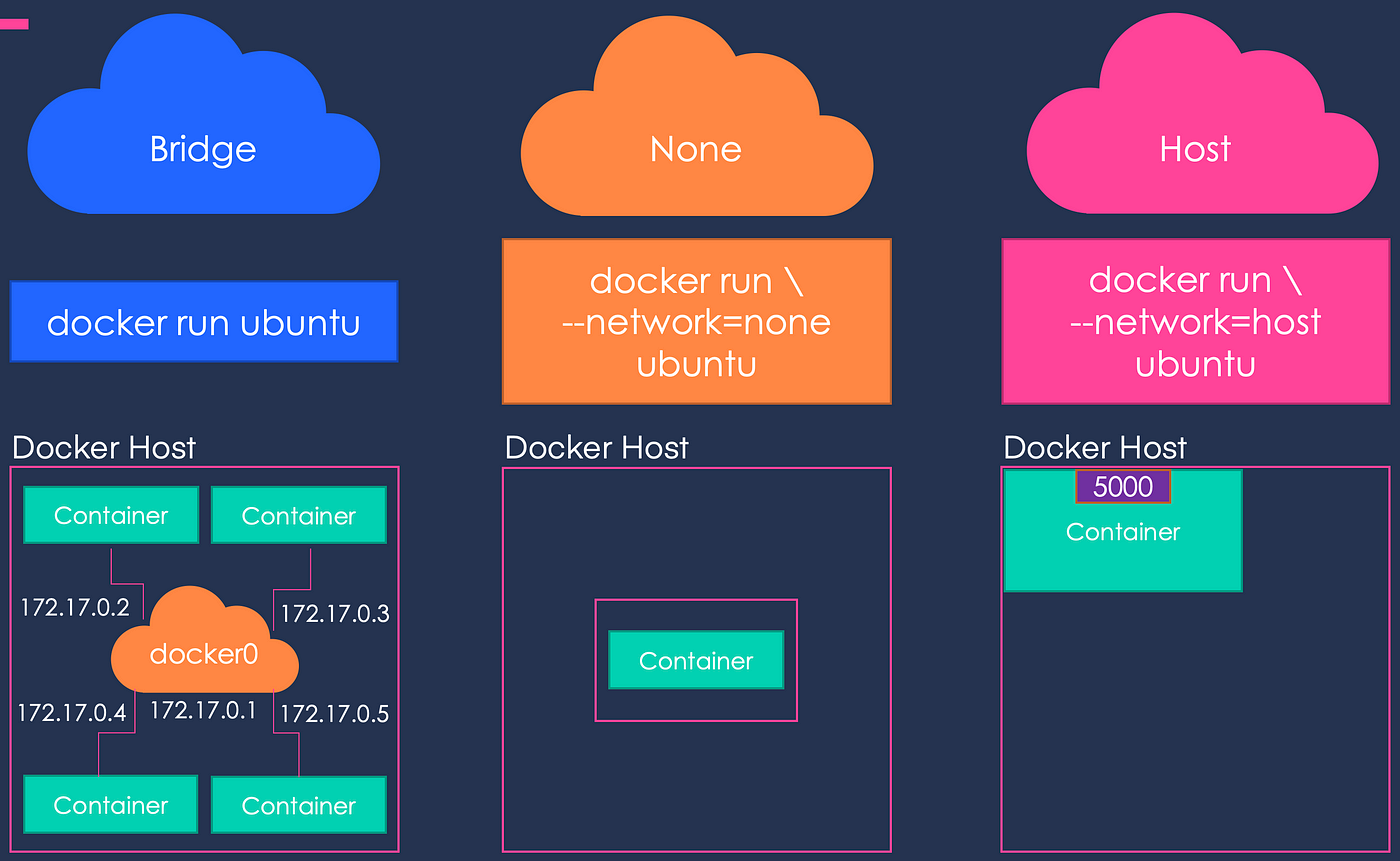

Docker provides three default networks when you install it:

Bridge network: It is the default network that provides IP connectivity to the containers running on the same Docker host. Containers attached to this network can communicate with each other using IP addresses.

Host network: It allows the container to use the network stack of the Docker host. Containers attached to the host network will have the same IP address as the host.

None network: It is a network that does not provide any IP address to the container, which is left with only a loopback interface. Containers attached to this network cannot communicate with other containers or the host. This network is mainly used for containers that do not require network access, such as offline batch jobs.
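A quick way to explore these networks is sketched below. The container name web and the nginx and alpine images are placeholders. The function lists the default networks, reaches one container from another over the default bridge by IP address, and then shows what a container on the none network can see.

```shell
# Hypothetical walkthrough: define the function, then run `demo_networks`.
demo_networks() {
  docker network ls                      # bridge, host and none should be listed

  # Start a container on the default bridge and look up its IP address
  docker run -d --name web nginx
  web_ip="$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' web)"

  # A second container on the same bridge can reach it by IP
  docker run --rm alpine ping -c 1 "$web_ip"

  # With the none network, only the loopback interface is present
  docker run --rm --network none alpine ip addr

  docker rm -f web
}
```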

Docker Compose

Docker Compose is a tool that allows you to define and run multi-container Docker applications. It uses a YAML file to define the services, networks, and volumes required for an application and then creates and starts all the containers with a single command.

One of the main benefits of Docker Compose is that it simplifies the process of running multiple containers by automating the creation and management of the required resources. With Compose, you can define all the containers required for an application in a single file, and then use a single command to start and stop them.
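As an illustration, the sketch below writes a small Compose file with two services, one published port, and one named volume. The service names web and cache, the images, and port 8080 are assumptions for the example. It also assumes the docker compose plugin is available (older installs use the separate docker-compose binary). Only the file is written until you call one of the functions.

```shell
# Create a scratch project directory; the location is arbitrary.
app_dir="$(mktemp -d)"

# Write an illustrative Compose file.
cat > "$app_dir/compose.yaml" <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"        # host port 8080 -> container port 80
    depends_on:
      - cache            # start cache before web
  cache:
    image: redis:alpine
    volumes:
      - cache-data:/data # persist Redis data in a named volume
volumes:
  cache-data:
EOF

# Hypothetical helpers: start and stop the whole application.
compose_up() {
  docker compose -f "$app_dir/compose.yaml" up -d &&
  docker compose -f "$app_dir/compose.yaml" ps
}
compose_down() {
  docker compose -f "$app_dir/compose.yaml" down
}
```

Calling compose_up starts both containers with one command, and compose_down stops and removes them again.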

Docker Swarm

Docker Swarm is a container orchestration tool that allows you to manage a cluster of Docker nodes as a single virtual system. It provides a way to deploy and manage Docker containers across multiple nodes, with built-in load balancing and the ability to scale services up or down on demand. Docker Swarm makes it easy to deploy and manage complex applications that require high availability and scalability.

Some benefits of Docker Swarm include:

Scalability: Docker Swarm allows users to scale their applications horizontally by adding more nodes to the cluster.

High availability: Docker Swarm provides high availability for applications by ensuring that if a node fails, the containers running on that node are automatically restarted on other healthy nodes in the cluster.

Load balancing: Docker Swarm automatically load balances incoming traffic to the containers running in the cluster, ensuring that the load is distributed evenly across the available nodes.

Security: Docker Swarm provides security features such as encrypted communication between nodes and containers.

The main components of Docker Swarm include:

Service: A service is a definition of the desired state of a group of containers. It includes details such as the number of replicas of the container that should be running, the network ports that should be exposed, and the configuration of the containers.

Task: A task is a running instance of a container. Each task is assigned to a specific node in the cluster.

Manager node: The manager node is responsible for managing the overall state of the cluster. It schedules tasks, monitors the health of the nodes and services, and provides an API endpoint for controlling the cluster.

Worker node: A worker node is a node that runs the containers for a service. It receives tasks from the manager node and reports back the status of the tasks to the manager node.
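To tie services, tasks, and nodes together, here is a sketch of a single-node swarm on a local machine. The service name web, the nginx image, and the replica counts are illustrative. On a host with several network interfaces, docker swarm init may also need the --advertise-addr option. Nothing runs until you call the function.

```shell
# Hypothetical walkthrough: define the function, then run `demo_swarm`.
demo_swarm() {
  docker swarm init                      # this machine becomes a manager node

  # Declare the desired state: three replicas of nginx, published on port 8080
  docker service create --name web --replicas 3 --publish 8080:80 nginx

  docker service ls                      # services and their replica counts
  docker service ps web                  # the tasks and the node each one runs on

  docker service scale web=5             # change the desired number of replicas

  # Clean up: remove the service and leave the swarm
  docker service rm web
  docker swarm leave --force
}
```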

Summary:

In this blog, we covered the basics of containerization with Docker, the advantages and disadvantages of Docker, Docker architecture, the basics of working with Docker commands, Docker images, Dockerfiles, Docker volumes, Docker networks, Docker Compose, and Docker Swarm.

I hope you learned something today with me!

Stay tuned for my next blog on "Docker-Compose-Project". I will keep sharing my learnings and knowledge here with you.

Let's learn together! I appreciate any comments or suggestions you may have to improve my blog content.

Thank you,

Chaitannyaa Gaikwad