Getting Started with Kubernetes: A Comprehensive Overview for Beginners

Table of contents

- What is this container orchestration?

- Let's learn Kubernetes--->

- Why is Kubernetes so popular?

- Let's understand the Architecture of Kubernetes--->

- Let's explore Kubernetes Clusters--->

- Installation and setup of Kubernetes

- Applications deployment on Kubernetes

- Monitoring the applications on Kubernetes

- Summary:

Have you ever wondered how Hotstar's live streaming of the Indian Premier League (IPL) cricket tournament, or Amazon's e-commerce website during its annual Prime Day sale, handles the heavy traffic coming from a huge number of visitors?

During the IPL season, Hotstar's live-streaming platform experiences a massive surge in traffic as cricket fans from all over the world tune in to watch their favorite teams compete. Managing such a huge traffic influx manually can be a daunting task. However, by using container orchestration tools like Kubernetes, Hotstar can easily scale its application up or down based on traffic demands, ensuring that every user gets a seamless streaming experience.

Similarly, Amazon's annual Prime Day sale is a major event that generates a tremendous amount of traffic on its website. To handle the traffic, Amazon needs to ensure that its website is highly available, scalable, and secure. By using container orchestration tools like Kubernetes or Docker Swarm, Amazon can easily manage its containerized application across multiple hosts, ensuring that the website is always available, and providing a seamless shopping experience for its customers.

What is this container orchestration?

Container orchestration is the process of managing and automating the deployment, scaling, and management of containerized applications. It involves using tools like Kubernetes, Docker Swarm, and Mesos to handle tasks such as load balancing, service discovery, and health monitoring, ensuring that containers are deployed efficiently and reliably across a cluster of hosts or cloud instances.

Container orchestration makes it easier to manage complex and dynamic applications, while also improving scalability, availability, and security.

Kubernetes has become popular due to its ability to automate the deployment, scaling, and management of containerized applications.

Let's learn Kubernetes--->

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications.

It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF).

Kubernetes provides a platform for managing containers across a distributed infrastructure, allowing organizations to manage their applications at scale with ease.

It provides a wide range of features, including automatic load balancing and scaling, service discovery, rolling updates, self-healing, resource management, and configuration management.

Kubernetes is widely used in modern cloud-native architectures, enabling organizations to build and deploy applications faster and more efficiently.

Why is Kubernetes so popular?

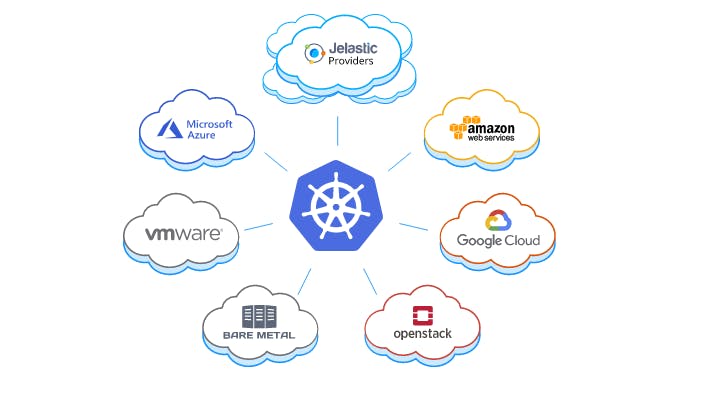

One of the key reasons for Kubernetes' popularity is its flexibility and scalability.

It can run on almost any infrastructure, from on-premises data centers to public cloud providers like Amazon Web Services, Microsoft Azure, and Google Cloud Platform.

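Kubernetes also works with any container runtime that implements its Container Runtime Interface (CRI), such as containerd and CRI-O, and it runs standard OCI container images, including images built with Docker or Podman. This makes it easy to run and manage containers.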

Another reason for Kubernetes' popularity is its rich set of features, including load balancing, service discovery, automatic scaling, rolling updates, and self-healing.

These features allow organizations to deploy and manage complex, dynamic applications at scale, without requiring manual intervention.

Let's understand the Architecture of Kubernetes--->

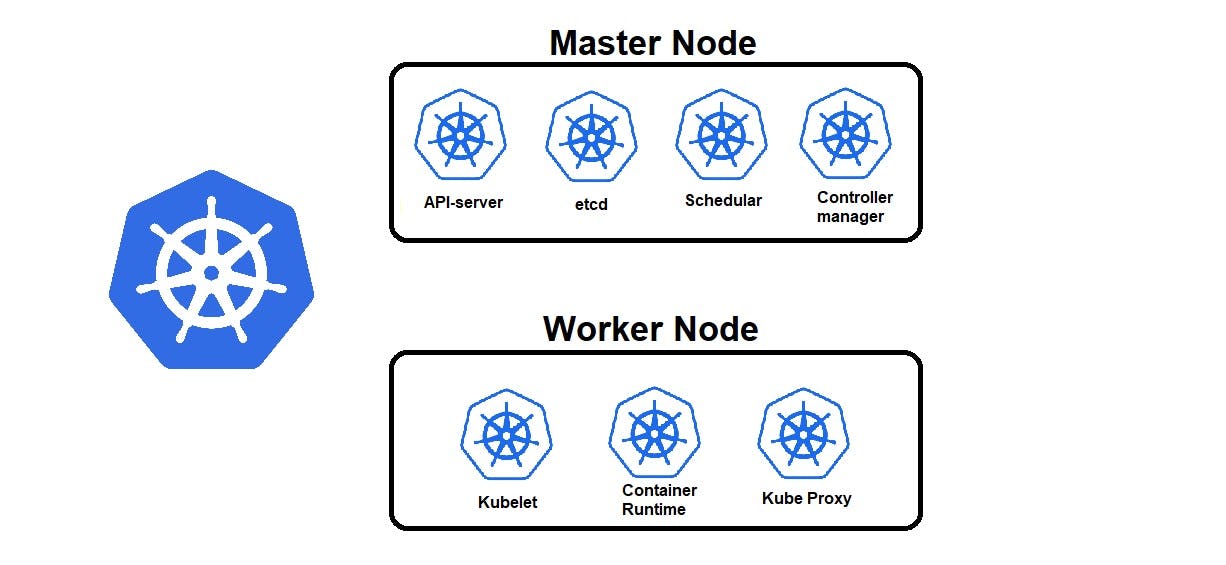

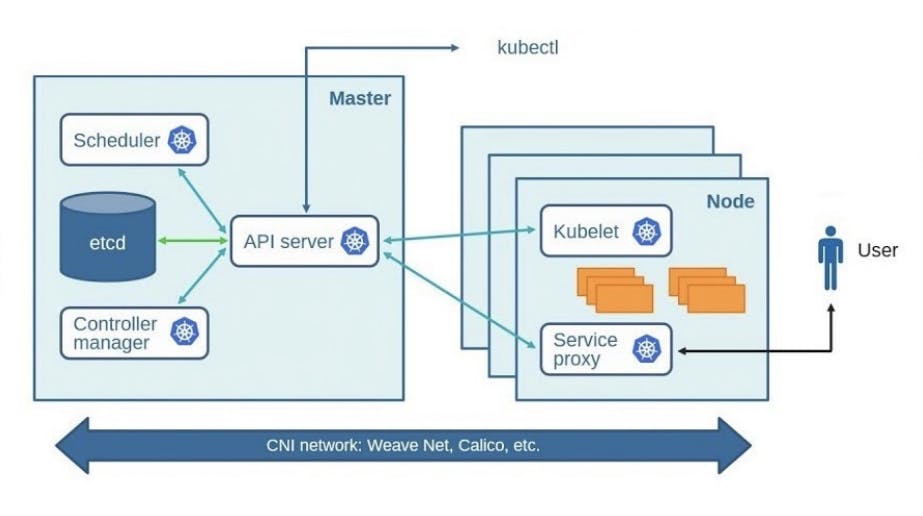

Kubernetes is a distributed system designed to manage containerized applications. Its architecture consists of one or more master nodes (the control plane) and multiple worker nodes (historically called minions). Together, these nodes form a Kubernetes cluster.

Let's take a closer look at each component of the Kubernetes architecture.

Master Node: The master node is the central control plane of the Kubernetes cluster. It is responsible for managing the overall state of the cluster and making decisions about how to deploy and manage containers. The master node consists of several components:

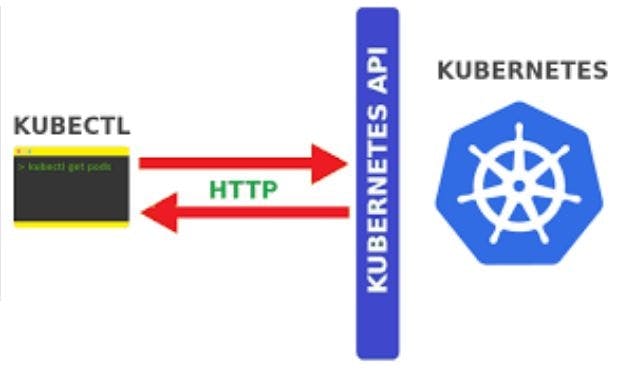

Kubernetes API Server: The API server is the primary management component of the Kubernetes cluster. It serves as the interface for all administrative tasks and provides a RESTful API that can be accessed by the other Kubernetes components.

Kubernetes Scheduler: The scheduler is responsible for placing containers onto worker nodes based on resource availability and other constraints.

Kubernetes Controller Manager: The controller manager is responsible for managing Kubernetes objects and controllers. It ensures that the desired state of the system is achieved and maintained.

The Kubernetes Controller Manager includes several controllers, and some of the most common ones are:

Node Controller: The Node Controller monitors the health of the nodes in the cluster. If a node stops responding, it marks the node as unhealthy and, after a timeout, evicts the pods running on it so they can be replaced on healthy nodes. In cloud environments, it also removes nodes whose underlying virtual machines no longer exist. It does not create new nodes itself.

ReplicaSet Controller: The ReplicaSet Controller is responsible for ensuring that a specified number of replicas of a pod are running at all times. It monitors the status of the pods and creates or deletes pods as necessary to maintain the desired number of replicas.

Job Controller: The Job Controller is responsible for managing the execution of batch jobs. It ensures that the jobs are completed successfully and that the desired number of completions is achieved.

Deployment Controller: The Deployment Controller manages the lifecycle of Deployments. It creates and updates the ReplicaSets behind each Deployment so that the desired number of pod replicas is running, and it rolls out updates to the Deployment gradually.

etcd: It is a distributed key-value store that stores the configuration data and state of the Kubernetes cluster. It is used to ensure consistency and reliability across the cluster.
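To make the Scheduler's placement decision more concrete, here is a minimal Python sketch of the two-step approach kube-scheduler uses: filtering (drop nodes that cannot fit the Pod) and scoring (rank the nodes that remain). The `Node` class, the node names and numbers, and the "most CPU left over" scoring rule are simplifying assumptions for illustration; the real scheduler compares Pod resource requests against each node's allocatable capacity and runs many configurable filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical, simplified view of a worker node.

    'free' means allocatable capacity minus what other Pods have already
    requested (assumed to be precomputed here).
    """
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def schedule(pod_cpu: int, pod_mem: int, nodes: list[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits."""
    # Step 1, filtering: keep only nodes that can fit the Pod's requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod_cpu and n.free_memory_mib >= pod_mem
    ]
    if not feasible:
        return None  # the Pod would stay Pending until capacity frees up
    # Step 2, scoring (simplified assumption): prefer the most CPU left over.
    best = max(feasible, key=lambda n: n.free_cpu_millicores - pod_cpu)
    return best.name


nodes = [
    Node("worker-1", free_cpu_millicores=500, free_memory_mib=1024),
    Node("worker-2", free_cpu_millicores=2000, free_memory_mib=512),
    Node("worker-3", free_cpu_millicores=1500, free_memory_mib=4096),
]
# worker-2 is filtered out (not enough memory); worker-3 scores highest.
print(schedule(pod_cpu=250, pod_mem=768, nodes=nodes))  # worker-3
```

Keeping filtering and scoring as separate steps mirrors the design of the real scheduler: hard constraints decide where a Pod *can* run, and soft preferences decide where it *should* run.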

Worker Nodes: The worker nodes are the nodes in the Kubernetes cluster that run the containerized applications. Each worker node consists of the following components:

Kubernetes Proxy: kube-proxy runs on each node in the cluster. It maintains network rules on the node so that traffic sent to a Service reaches the right pods.

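Container Runtime: The container runtime is responsible for pulling images and running and managing the containers on the worker node. Kubernetes works with runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O. (Built-in support for Docker Engine through dockershim was removed in Kubernetes v1.24, and rkt is no longer maintained.)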

Kubelet: The kubelet is responsible for managing the state of the pods running the containers on the worker node. It ensures that the containers are running, healthy, and have the necessary resources.

What is a Pod, which runs the containers inside it?

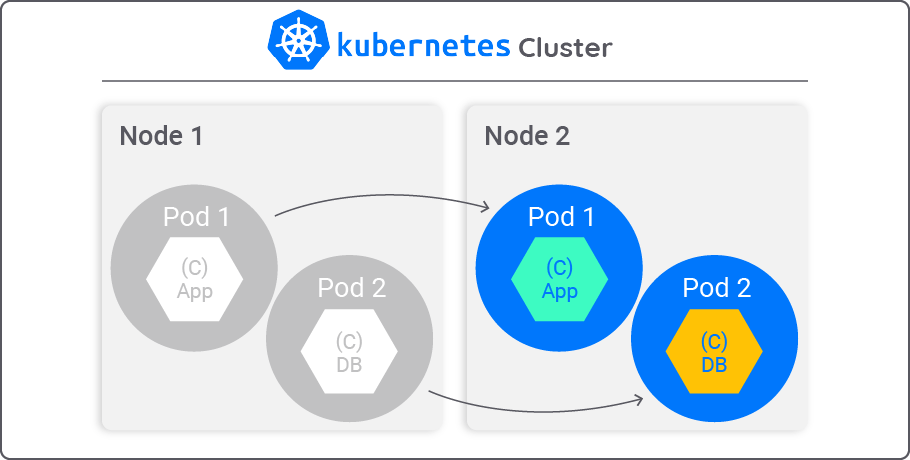

In Kubernetes, a Pod is the smallest and simplest unit in the platform. It represents a single instance of a running process within the cluster, and it can contain one or more containers. Container runtime is the software responsible for running the containers in a pod. A Pod provides a way to encapsulate and manage one or more containers as a single entity.

When you create a Pod, you can specify one or more containers to run inside it. These containers share the same network namespace and can communicate with each other using localhost. They can also mount the same volumes, which allows them to share data.

Let's explore Kubernetes Clusters--->

A Kubernetes cluster is a group of one or more physical or virtual machines, called nodes, that are used to deploy and manage containerized applications.

In Kubernetes, several types of clusters can be created, depending on the requirements of your application and infrastructure. Here are some common types of Kubernetes clusters:

Single-node cluster: This type of cluster consists of only one node, and it's typically used for local development and testing purposes.

- Minikube: A popular tool for running a single-node Kubernetes cluster locally for development and testing.

Multi-node cluster: This is the most common type of Kubernetes cluster, and it consists of multiple worker nodes and a master node. The worker nodes are responsible for running the application workloads, while the master node manages the cluster state, scheduling, and orchestration.

Kubeadm: A command-line tool for bootstrapping a multi-node Kubernetes cluster on-premises or in the cloud.

Managed Kubernetes services: Cloud providers such as AWS, Google Cloud, and Microsoft Azure offer managed Kubernetes services that allow you to easily deploy and manage a multi-node Kubernetes cluster.

Examples: Amazon EKS, Azure AKS, Google GKE.

High availability (HA) cluster: This type of cluster is designed for production environments that require high availability and fault tolerance. An HA cluster typically consists of multiple master nodes, each running on a separate physical or virtual machine, to ensure that the cluster remains operational even if one of the master nodes fails.

Kubeadm HA: kubeadm can also set up a highly available control plane with multiple master nodes, using either stacked etcd (etcd runs on the master nodes) or an external etcd cluster.

Kops: A tool that automates the deployment of production-grade Kubernetes clusters on AWS.

Hybrid cluster: A hybrid cluster combines on-premises infrastructure with cloud resources. This type of cluster is useful when you need to deploy applications across multiple environments or when you need to scale your applications across different locations.

Kubefed: A tool for managing and deploying applications across multiple Kubernetes clusters running in different environments, including on-premises and the cloud. (The KubeFed project has since been archived.)

Kubernetes Anywhere: A tool for deploying a Kubernetes cluster on any infrastructure, including on-premises, in the cloud, or at the edge. (This project has also since been archived.)

Installation and setup of Kubernetes

Installation and setup of Kubernetes can vary depending on the chosen deployment option, whether it's on a cloud platform, on-premises, or locally.

Here's an overview of the different installation options and some popular tools for each:

Cloud Providers:

Amazon Web Services (AWS): Amazon Elastic Kubernetes Service (EKS)

Microsoft Azure: Azure Kubernetes Service (AKS)

Guide: Creating an AKS cluster by using the Azure portal (Azure Kubernetes Service documentation)

Google Cloud Platform (GCP): Google Kubernetes Engine (GKE)

Guide: Creating a zonal cluster (Google Kubernetes Engine documentation)

On-premises Solutions:

OpenShift: An enterprise Kubernetes platform by Red Hat.

Guide: Installing OpenShift (OpenShift Container Platform 3.11 documentation)

Rancher: A popular open-source Kubernetes management platform.

Guide: Setting up a High-availability RKE Kubernetes Cluster (Rancher Docs)

Mirantis: A cloud-native infrastructure company that offers a Kubernetes solution.

Guide: Building Certified Kubernetes Cluster On-Premises (Mirantis)

Local Development Options:

Minikube: A tool that allows you to run a single-node Kubernetes cluster on your local machine.

Kubeadm: A tool for bootstrapping a multi-node Kubernetes cluster; on a local machine, you can use it across several virtual machines.

Kind: A tool for running single-node as well as multi-node Kubernetes clusters using Docker containers as nodes on your local machine.

Recommended read: "Getting started with kind: creating a multi-node local Kubernetes cluster", a nice blog by Chirag Varshney.

Applications deployment on Kubernetes

Deploying applications on Kubernetes involves creating and managing Kubernetes objects using YAML manifests.

YAML manifests are a way to define Kubernetes objects and their configuration in a declarative format. YAML originally stood for "Yet Another Markup Language" and now officially stands for "YAML Ain't Markup Language"; it is a human-readable data serialization format. Kubernetes manifests are usually stored in YAML files and include definitions for different types of objects, such as Pods, Deployments, ReplicaSets, StatefulSets, DaemonSets, PersistentVolumes, Services, Namespaces, ConfigMaps, Secrets, and Jobs.

These manifests define the desired state of the Kubernetes cluster and the objects running on it. They can be used to create, modify, or delete objects, and Kubernetes will ensure that the actual state matches the desired state specified in the manifest. YAML manifests provide a powerful way to automate the management of Kubernetes objects and ensure consistent and reproducible deployments.

A deployment object is used to define the desired state of an application and manage its lifecycle. A service object is used to provide a stable IP address and DNS name for a set of pods, and it allows applications to communicate with each other within the cluster. A pod is the smallest deployable unit in Kubernetes and represents a single instance of an application.

The syntax for YAML manifests:

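```yaml
apiVersion: <api-version>      # e.g. v1 or apps/v1
kind: <object-kind>            # e.g. Pod, Deployment, Service
metadata:
  name: <object-name>
  labels:
    app: <app-label>
spec:                          # the fields under spec depend on the kind; this is a Pod spec
  containers:
    - name: <container-name>
      image: <image>
      ports:
        - containerPort: <port>
```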

Let's Start with Pod--->

Pods are the fundamental building blocks of the Kubernetes system. They are used to deploy, scale, and manage containerized applications in a cluster.

Here are some examples of Kubernetes objects defined with manifests:

Pod:

A Pod can host a single container or a group of containers that need to "sit closer together".

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx-container
      image: nginx:latest
      ports:
        - containerPort: 80
```

Deployment:

The deployment object is used to manage the lifecycle of one or more identical Pods. A Deployment allows you to declaratively manage the desired state of your application, such as the number of replicas, the image to use for the Pods, and the resources required. Declaratively managing the state means specifying the desired end state of an application, rather than describing the steps to reach that state.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx-container
          image: nginx:latest
          ports:
            - containerPort: 80
```
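The Deployment above does not set an update strategy, so it uses the default `RollingUpdate` strategy, in which `maxSurge` and `maxUnavailable` both default to 25%. As a rough sketch, the Python function below shows how those percentages turn into Pod counts: per the Kubernetes documentation, `maxSurge` is rounded up and `maxUnavailable` is rounded down, and the API rejects setting both to 0. The fencepost step (bumping `maxUnavailable` to 1 when both round to 0, so the rollout can still progress) mirrors the Deployment controller's behavior. The function name is hypothetical; Kubernetes performs this calculation internally.

```python
def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total Pods, min available Pods) during a rolling update.

    Percentages are whole numbers, e.g. 25 for 25%.
    """
    if max_surge_pct == 0 and max_unavailable_pct == 0:
        # The API rejects this combination: the rollout could never progress.
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    # maxSurge: percentage -> absolute number, rounded UP (integer ceiling).
    surge = -(-replicas * max_surge_pct // 100)
    # maxUnavailable: percentage -> absolute number, rounded DOWN.
    unavailable = replicas * max_unavailable_pct // 100
    if surge == 0 and unavailable == 0:
        # Fencepost: allow one unavailable Pod so the update can still proceed.
        unavailable = 1
    return replicas + surge, replicas - unavailable


# nginx-deployment above: replicas: 3 with the default 25% / 25%.
print(rolling_update_bounds(3))   # (4, 3): at most 4 Pods, at least 3 available
print(rolling_update_bounds(10))  # (13, 8)
```

With `replicas: 3`, this means Kubernetes can start one extra new Pod before removing an old one, so capacity never drops below three Pods during the update.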

ReplicaSet:

In Kubernetes, Deployments don’t manage Pods directly. That’s the job of the ReplicaSet object. When you create a Deployment in Kubernetes, a ReplicaSet is created automatically. The ReplicaSet ensures that the desired number of replicas (copies) are running at all times by creating or deleting Pods as needed.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: nginx-replicaset
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx-container
          image: nginx:latest
          ports:
            - containerPort: 80
```
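The ReplicaSet's job of "creating or deleting Pods as needed" is an instance of the reconciliation (control loop) pattern that Kubernetes controllers follow: compare the desired state with the observed state and act on the difference. Below is a minimal Python sketch of one reconciliation pass for a ReplicaSet-like controller. The in-memory list standing in for the cluster and the numbered Pod names are assumptions for illustration; the real controller watches the API server, adds random suffixes to Pod names, and when scaling down ranks Pods (for example, preferring to delete Pods that are not yet running or ready) instead of simply dropping the last ones.

```python
import itertools

# Hypothetical stand-in for the random suffix the real controller adds to Pod names.
_next_id = itertools.count(1)


def reconcile(desired_replicas: int, running_pods: list[str],
              name_prefix: str = "nginx-replicaset") -> list[str]:
    """Run one pass of a simplified ReplicaSet control loop.

    Compares the desired replica count with the observed Pods and returns
    the Pod list after creating or deleting Pods to close the gap.
    """
    pods = list(running_pods)  # work on a copy; don't mutate the caller's list
    diff = desired_replicas - len(pods)
    if diff > 0:
        # Too few Pods: create the missing ones.
        pods.extend(f"{name_prefix}-{next(_next_id)}" for _ in range(diff))
    elif diff < 0:
        # Too many Pods: delete the surplus (this sketch just drops the last ones).
        del pods[diff:]
    return pods


pods = reconcile(3, [])    # nothing running yet: create 3 Pods
pods = pods[1:]            # simulate one Pod crashing and disappearing
pods = reconcile(3, pods)  # the next pass creates a replacement
print(len(pods))           # 3
pods = reconcile(1, pods)  # scale down to 1 replica
print(pods)                # ['nginx-replicaset-2']
```

Because each pass only looks at the current difference, the same loop handles crashes, manual deletions, and changes to `replicas` without any special cases; this is what "declarative" means in practice.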

Service:

A Kubernetes Service is a way to access a group of Pods that provide the same functionality. It creates a single, consistent point of entry for clients to access the service, regardless of the location of the Pods.

One of the key benefits of using a Service is that it provides a stable endpoint that doesn't change even if the underlying Pods are recreated or replaced. This makes it much easier to update and maintain the application, as clients don't need to be updated with new IP addresses.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - name: http
      port: 80
      targetPort: 80
```
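A Service finds its Pods through its `selector`: a Pod is selected when every key/value pair in the selector also appears in the Pod's labels (the Pod may carry extra labels). The minimal Python sketch below illustrates that equality-based matching; the Pod names and the `pod-template-hash` value are hypothetical. In a real cluster, the matching Pods' addresses are recorded in EndpointSlices and only Pods that are ready receive traffic, and a Service without a selector does not pick Pods automatically, which the sketch models by matching nothing for an empty selector.

```python
def selector_matches(selector: dict[str, str], pod_labels: dict[str, str]) -> bool:
    """True if every selector key/value pair is present in the Pod's labels."""
    if not selector:
        # A Service with no selector doesn't pick Pods automatically.
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


service_selector = {"app": "nginx"}  # from the nginx-service manifest above
pods = {  # hypothetical Pods and their labels
    "nginx-pod": {"app": "nginx"},
    "nginx-deployment-7d9c5f8b6d-x2k4p": {"app": "nginx", "pod-template-hash": "7d9c5f8b6d"},
    "redis-pod": {"app": "redis"},
}
endpoints = [name for name, labels in pods.items()
             if selector_matches(service_selector, labels)]
print(endpoints)  # ['nginx-pod', 'nginx-deployment-7d9c5f8b6d-x2k4p']
```

Note that, because all the examples in this section use the label `app: nginx`, this Service would send traffic to the standalone Pod as well as to the Pods created by the Deployment or ReplicaSet.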

Note that these examples are just for illustration purposes and may need to be adapted to suit your specific use case.

Once the manifest is created, you can apply it using the `kubectl apply -f <filename.yml>` command. This will create the specified Kubernetes objects and deploy the application on the cluster.

In summary, deploying applications on Kubernetes involves creating and managing Kubernetes objects using YAML manifests, and monitoring and scaling can be done using various tools and built-in features.

Monitoring the applications on Kubernetes

Once you have deployed your application to a Kubernetes cluster, it's important to monitor and scale it to ensure optimal performance and availability.

Monitoring and scaling applications on Kubernetes can be done using various tools, such as kubectl, Prometheus, and Grafana. Kubernetes also provides built-in autoscaling through the HorizontalPodAutoscaler, which automatically adjusts the number of replicas based on CPU utilization or other metrics.
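The HorizontalPodAutoscaler documentation describes its core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). Here is a simplified Python sketch of that rule. The function name, the min/max replica defaults, and the example numbers are assumptions; the real controller also applies stabilization windows and other scaling behaviors not shown here.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HorizontalPodAutoscaler rule (no stabilization windows)."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Core rule: scale proportionally to how far the metric is from its target.
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (the defaults here are illustrative).
    return max(min_replicas, min(max_replicas, desired))


# Target: 60% average CPU utilization (hypothetical numbers).
print(hpa_desired_replicas(3, current_metric=90, target_metric=60))  # 5
print(hpa_desired_replicas(4, current_metric=30, target_metric=60))  # 2
print(hpa_desired_replicas(3, current_metric=63, target_metric=60))  # 3 (within tolerance)
```

The tolerance keeps the autoscaler from adding and removing Pods in response to small fluctuations around the target.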

Commonly used tools for monitoring and scaling applications on Kubernetes include:

kubectl: This command-line tool is used for managing Kubernetes clusters and objects, including deploying applications, scaling resources, and monitoring the status of applications.

To monitor and scale your Kubernetes application, you can use kubectl to check the status of your application, including the number of pods, their status, and their resource usage. You can also use kubectl to scale the number of replicas of a particular deployment up or down. Here are some kubectl commands for monitoring and scaling Kubernetes applications:

- `kubectl get pods`: lists the pods in the current namespace and their status (add `--all-namespaces` to see every pod in the cluster).

- `kubectl logs <pod-name>`: shows the logs of a specific pod.

- `kubectl top pod <pod-name>`: shows the CPU and memory usage of a specific pod (requires the Metrics Server to be installed in the cluster).

- `kubectl scale deployment <deployment-name> --replicas=<number>`: scales a deployment to the specified number of replicas.

Prometheus: A popular open-source monitoring and alerting toolkit that collects and stores time-series data from various sources, including Kubernetes, and allows you to visualize and analyze this data.

Prometheus can be used to monitor resource usage, such as CPU and memory, as well as metrics related to the performance of your application, such as request latency and error rates.

Grafana: A popular open-source dashboard and visualization tool that can be used with Prometheus to create custom dashboards and alerts.

You can use Grafana to build dashboards that present the data collected by Prometheus in a way that's easy to understand.

Summary:

In this blog article, we discussed what container orchestration is and where it is used, and then introduced Kubernetes, a popular open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It is used to manage and run large-scale distributed systems and provides benefits such as scalability, high availability, portability, improved resource utilization, and cost savings.

Kubernetes architecture is composed of a master node and worker nodes, and uses objects and controllers to manage application resources. Installation options include cloud, on-premises, and local, and application deployment is done using YAML manifests. Monitoring and scaling applications on Kubernetes can be achieved with tools such as kubectl, Prometheus, and Grafana. With its many benefits and features, Kubernetes is a powerful tool for managing containerized applications.

I hope you learned something today with me!

Stay tuned for my next blog on "Project - Application Deployment with Kubernetes". I will keep sharing my learnings and knowledge here with you.

Let's learn together! I appreciate any comments or suggestions you may have to improve my learning and blog content.

Thank you,

Chaitannyaa Gaikwad